Backup failures and SSHFS

Initial investigations

My healthchecks instance informed me that my Borg backup cronjob on the system-apps VM was failing. Taking a look at it manually, I see the following:

root@system-apps:~# borgmatic -v1

/etc/borgmatic/config.yaml: Parsing configuration file

/mnt/freenas/Backups/borg: Pruning archives

Local Exception

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/borg/archiver.py", line 4455, in main

exit_code = archiver.run(args)

File "/usr/lib/python3/dist-packages/borg/archiver.py", line 4387, in run

return set_ec(func(args))

File "/usr/lib/python3/dist-packages/borg/archiver.py", line 139, in wrapper

with repository:

File "/usr/lib/python3/dist-packages/borg/repository.py", line 189, in __enter__

self.open(self.path, bool(self.exclusive), lock_wait=self.lock_wait, lock=self.do_lock)

File "/usr/lib/python3/dist-packages/borg/repository.py", line 392, in open

self.lock = Lock(os.path.join(path, 'lock'), exclusive, timeout=lock_wait, kill_stale_locks=hostname_is_unique()).acquire()

File "/usr/lib/python3/dist-packages/borg/locking.py", line 350, in acquire

self._wait_for_readers_finishing(remove, sleep)

File "/usr/lib/python3/dist-packages/borg/locking.py", line 363, in _wait_for_readers_finishing

self._lock.acquire()

File "/usr/lib/python3/dist-packages/borg/locking.py", line 140, in acquire

with open(self.unique_name, "wb"):

OSError: [Errno 5] Input/output error: '/mnt/freenas/Backups/borg/lock.exclusive/system-apps.hosts.lan@46333701320834.18075-0'

Platform: Linux system-apps 4.19.0-11-amd64 #1 SMP Debian 4.19.146-1 (2020-09-17) x86_64

Linux: debian 10.6

Borg: 1.1.9 Python: CPython 3.7.3

PID: 18075 CWD: /root

sys.argv: ['/usr/bin/borg', 'prune', '/mnt/freenas/Backups/borg', '--keep-hourly', '24', '--keep-daily', '7', '--keep-weekly', '4', '--keep-monthly', '6', '--keep-yearly', '1', '--prefix', '{hostname}-', '--stats', '--info']

SSH_ORIGINAL_COMMAND: None

Command '('borg', 'prune', '/mnt/freenas/Backups/borg', '--keep-hourly', '24', '--keep-daily', '7', '--keep-weekly', '4', '--keep-monthly', '6', '--keep-yearly', '1', '--prefix', '{hostname}-', '--stats', '--info')' returned non-zero exit status 2.

Need some help? https://torsion.org/borgmatic/#issues

Repeating this cronjob shows inconsistent failure location: sometimes it occurs during the prune operation, sometimes during the check operation, but invariably while acquiring its lock.

I have an open issue from a month back in my GitLab instance identifying the first instance of the error. I had flagged it as “non-breaking bug” as it only occurred ~5% of the time. Clearly something’s changed now that it’s happening ~95% of the time.

- Backups might have grown to a point that the connection lasts longer, exposing an otherwise hidden connectivity bug.

- I recently replaced the Raspberry Pi bridging wifi dongle (moving from 2.4 GHz to 5 GHz) which required new drivers.

- I’m not seeing errors as frequently on other VMs, which suggests it might be specific to system-apps.

Approach 1: SSHFS mount options

There’s a relevant discussion in the Borg backup repo

that suggests using mounting options reconnect,cache=no,noauto_cache,entry_timeout=0 to resolve the

issue. Mucking around with fstab, remounting, re-running borgmatic, and this investigation path proves

fruitless. The commenters don’t seem quite sure how the options are actually impacting SSHFS and I

don’t have time to dig.

Approach 2: Race condition

I know I’m likely going to replace SSHFS with NFS or SMB for reasons mentioned below, but as a quick

stab in the dark, let’s note that the script always fails at locking.py:140. Maybe there’s a subtle

race condition in SSHFS? I try introducing a short sleep between the creation of self.path and

self.unique_name. If it works, great. I save myself the effort of having to edit all my /etc/fstab

files. If not, it’s only a few minutes of testing.

def acquire(self, timeout=None, sleep=None):

if timeout is None:

timeout = self.timeout

if sleep is None:

sleep = self.sleep

timer = TimeoutTimer(timeout, sleep).start()

while True:

try:

os.mkdir(self.path)

except FileExistsError: # already locked

if self.by_me():

return self

self.kill_stale_lock()

if timer.timed_out_or_sleep():

raise LockTimeout(self.path)

except OSError as err:

raise LockFailed(self.path, str(err)) from None

else:

+ sleep(1)

with open(self.unique_name, "wb"):

pass

return self

Misleadingly, this worked the first time I tested after making this change. Unfortunately, I think this was just luck as subsequent attempts all failed in the same location. Playing around with the sleep duration in the false hope this was related was likewise fruitless. Change reverted.

Approach 3: Ditch SSHFS

Searching around, I find other complaints about SSHFS that make me consider using NFS or SMB instead. I initially went with SSHFS as that’s what the Borg documentation recommends and it affords me the ability to use public key login. But if there are stability issues, perhaps I take a look at my other options.

Approach 3A: Install Borg directly on FreeNAS

Borg has the capacity to run remote repositories via SSH, but this requires the installation of Borg on the remote host (FreeNAS). While Borg does have FreeBSD releases, I’m reluctant to install any non-isolated third-party code on to what might be my most critical host. I’d honestly rather deal with spotty backups over SSHFS than expose myself to this kind of unnecessary risk.

Approach 3B: Use SMB or NFS instead

Neither SMB nor NFS support public key logins, which while understandable is a frustrating choice between strong authentication and stable backups. On the upside, FreeNAS does support subnet restrictions for both SMB and NFS shares, which will be sufficient in my context. Noting that SMB is single-threaded, I head in the direction of NFS with the intent of circling back to SMB if NFS doesn’t work out.

- In FreeNAS, I set up the NFS shared paths.

- Mounting the shares, permission issues indicate that I also need to set up FreeNAS’

Maproot UserandMaproot Groupoptions. Fixed. - Trying again, I find that some of my paths are empty.

A few moments go by before I realize it’s specifically any nested datastore.

- Investigation shows this is an NFS limitation with discussion back in 2009.

- I try using FreeNAS’

All dirsoption and mounting the paths separately, but apparently “all dirs” doesn’t cross datastores. - An easy solution is to share each FreeNAS datastore separately. Somewhat irritating, but it’s a one-time setup.

- With the mounts functional, I run the backup script again and find it working. Moreover, it’s working a lot faster than before.

Closing thoughts

- Backups are now functioning reliably (the core problem has been solved).

- Performance has shot up (unexpected considering posts like Jake’s NAS Performance analysis don’t suggest this level of performance boost).

- Authentication moved from public key to subnet restriction (less happy about this).

- It might be worth investigating Kerebos to recover some measure of authentication security, but that’s an experiment for another day.

- I’m still unclear as to why the problem reared its head when it did. I suspect moving away from the wifi bridge when we eventually have the space will resolve the SSHFS issue, but that’s just conjecture.

Edit from the future

It seems as though even with NFS, the problem rears its head again. The intermediary solution I’ve put in place is to run the Borg backups directly within the VM and to rsync the backups to FreeNAS on a nightly basis. This greatly speeds up the backup process itself and separates the “do the backup” from the “store the backup remotely” tasks in two. While VM snapshots are now larger, I regain public key authentication and efficient consistency checking. A trade I’m happy to make.

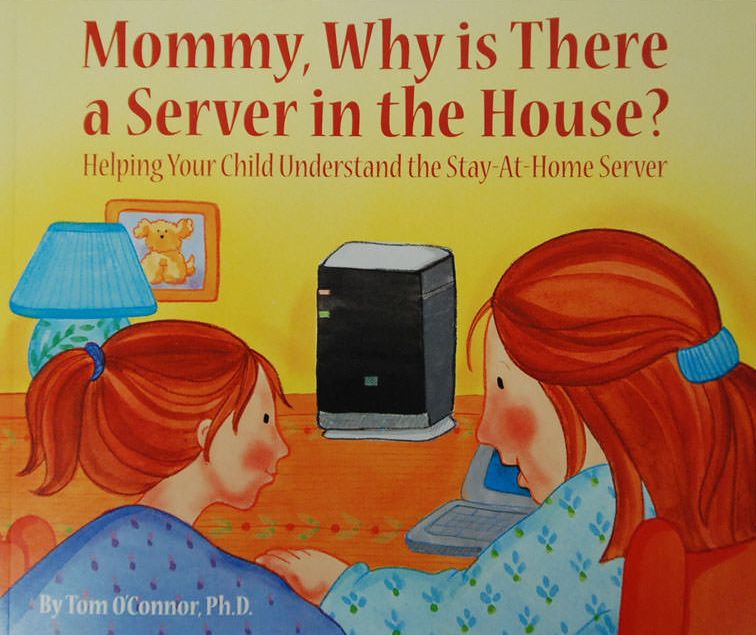

The long-term solution is to move the FreeNAS on to ethernet and avoid this whole hassle. I’m starting to considering drilling holes through a few walls to make that doable. Alternativelty, what could possibly go wrong with putting a server in a nursery?